The graphic content of some of the posts reprinted within this article may offend some readers. However, our belief is that readers cannot fully understand the importance of how hate speech is handled without seeing it unvarnished and unredacted.


Facebook’s community standards prohibit violent threats against people based on their religious practices. So when ProPublica reader Holly West saw this graphic Facebook post declaring that “the only good Muslim is a fucking dead one,” she flagged it as hate speech using the social network’s reporting system.

Facebook declared the photo to be acceptable. The company sent West an automated message stating: “We looked over the photo, and though it doesn’t go against one of our specific Community Standards, we understand that it may still be offensive to you and others.”

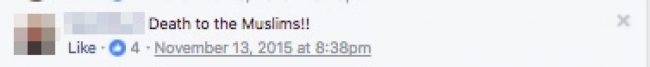

But Facebook took down a terser anti-Muslim comment — a single line declaring “Death to the Muslims,” without an accompanying image — after users repeatedly reported it.

Both posts were violations of Facebook’s policies against hate speech. But only one of them was caught by Facebook’s army of 7,500 censors — known as content reviewers — who decide whether to allow or remove posts flagged by its 2 billion users. After being contacted by ProPublica, Facebook also took down the one West complained about.

Such inconsistent Facebook rulings are not unusual, ProPublica has found in an analysis of more than 900 posts submitted to us as part of a crowd-sourced investigation into how the world’s largest social network implements its hate-speech rules. Judging from this small fraction of Facebook posts, the company’s content reviewers often make different calls on items with similar content, and don’t always abide by its complex guidelines. Even when they do follow the rules, racist or sexist language may survive scrutiny because it is not sufficiently derogatory or violent to meet Facebook’s definition of hate speech.

We asked Facebook to explain its decisions on a sample of 49 items, sent in by people who maintained that content reviewers had erred, mostly by leaving hate speech up, or in a few instances by deleting legitimate expression. In 22 cases, Facebook said its reviewers had made a mistake. In 19, it defended the rulings. In six cases, Facebook said the content did violate its rules but its reviewers had not actually judged it one way or the other because users had not flagged it correctly, or the author had deleted it. In the other two cases, it said it didn’t have enough information to respond.

“We’re sorry for the mistakes we have made — they do not reflect the community we want to help build,” Facebook Vice President Justin Osofsky said in a statement. “We must do better.” He said Facebook will double the size of its safety and security team, which includes content reviewers and other employees, to 20,000 people in 2018, in an effort to enforce its rules better.

He added that Facebook deletes about 66,000 posts reported as hate speech each week, but that not everything offensive qualifies as hate speech. “Our policies allow content that may be controversial and at times even distasteful, but it does not cross the line into hate speech,” he said. “This may include criticism of public figures, religions, professions, and political ideologies.”

In several instances, Facebook ignored repeated requests by users to delete hateful content that violated its guidelines. At least a dozen people, as well as the Anti-Defamation League in 2012, lodged protests with Facebook to no avail about a page called Jewish Ritual Murder. However, after ProPublica asked Facebook about the page, it was taken down.

Facebook’s guidelines are very literal in defining a hateful attack, which means that posts expressing bias against a specific group but lacking explicitly hostile or demeaning language often stay up, even if they use sarcasm, mockery or ridicule to convey the same message. Because Facebook tries to write policies that can be applied consistently across regions and cultures, its guidelines are sometimes blunter than it would like, a company spokesperson said.

Consider this photo of a black man missing a tooth and wearing a Kentucky Fried Chicken bucket on his head. The caption states: “Yeah, we needs to be spending dat money on food stamps wheres we can gets mo water melen an fried chicken.”

ProPublica reader Angie Johnson reported the image to Facebook and was told it didn’t violate its rules. When we asked for clarification, Facebook said the image and text were okay because they didn’t include a specific attack on a protected group.

By comparison, a ProPublica reader, who asked not to be named, shared with us a post about race in which she expressed exasperation with racial inequality in America by saying, “White people are the fucking most.” Her comment was taken down by Facebook soon after it was published.

How Facebook handles such speech is important because hate groups use the world’s largest social network to attract followers and organize demonstrations. After the white supremacist rally in Charlottesville, Virginia, this summer, CEO Mark Zuckerberg pledged to step up monitoring of posts celebrating “hate crimes or acts of terrorism.” Yet some activists for civil rights and women’s rights end up in “Facebook jail,” while pages run by groups listed as hateful by the Southern Poverty Law Center are decked out with verification checkmarks and donation buttons.

In June, ProPublica reported on the secret rules that Facebook’s content reviewers use to decide which groups are “protected” from hate speech. We revealed that the rules protected “white men” but not “black children” because “age,” unlike race and gender, was not a protected category. (In response to our article, Facebook added the category of “age” to its protected characteristics.) However, since subgroups are not protected, an attack on poor children, beautiful women, or Indian taxi cab drivers would still be considered acceptable.

Facebook defines seven types of “attacks” that it considers hate speech: calls for exclusion, calls for violence, calls for segregation, degrading generalization, dismissing, cursing and slurs.

For users who want to contest Facebook’s rulings, the company offers little recourse. Users can provide feedback on decisions they don’t like, but there is no formal appeals process.

Of the hundreds of readers who submitted posts to ProPublica, only one said Facebook reversed a decision in response to feedback. Grammy-winning musician Janis Ian was banned from posting on Facebook for several days for violating community standards after she posted a photo of a man with a swastika tattooed on the back of his head — even though the text overlaid on the photo urged people to speak out against a Nazi rally. Facebook also removed the post.

A group of her fans protested her punishment, and some reached out to their contacts in Silicon Valley. Shortly afterwards, the company reversed itself, restoring the post and Ian’s access. “A member of our team accidentally removed something you posted on Facebook,” it wrote to Ian. “This was a mistake, and we sincerely apologize for this error. We’ve since restored the content, and you should now be able to see it.”

“Here’s the frustrating thing for me as someone who uses Facebook: when you try to find out what the community standards are, there’s no place to go. They change them willy-nilly whenever there’s controversy,” Ian said. “They’ve made themselves so inaccessible.”

Without an appeals process, some Facebook users have banded together to flag the same offensive posts repeatedly, in the hope that a higher volume of reports will finally reach a sympathetic moderator. Annie Ramsey, a feminist activist, founded a group called “Double Standards” to mobilize members against disturbing speech about women. Members post egregious examples to the private group, such as this image of a woman in a shopping cart, as if she were merchandise.

Facebook’s rules prohibit dehumanization and bullying. Ramsey’s group repeatedly complained about the image but was told it didn’t violate community standards.

When we brought this example to Facebook, the company defended its decision. Although its rules prohibit content that depicts, celebrates or jokes about non-consensual sexual touching, Facebook said, this image did not contain enough context to demonstrate non-consensual sexual touching.

Ramsey’s group had more luck with another picture of a woman in a shopping cart, this time with the caption, “Returned my defective sandwich-maker to Wal-Mart.” The group repeatedly flagged this post en masse, and eventually it got taken down. The difference may have been that the woman in this image was bloodied, suggesting she was the victim of a sexual assault. Facebook’s guidelines call for removing images that mock the victims of rape or non-consensual sexual touching, hate crimes or other serious physical injuries, the spokesperson said.

Facebook said it takes steps to prevent mass reporting, a tactic used not only by “Double Standards” but by other advocacy groups, from influencing decisions. It uses automation to recognize duplicate reports, and caps the number of times it reviews a single post, according to a Facebook official.

Members of Ramsey’s group have run afoul of Facebook’s rules for what they consider candid discussion of gender issues. Facebook took down a post by one member, Charro Sebring, which said, “Men really are trash.”

Facebook defended its decision to remove what it called a “gender-based attack.”

Facebook banned Ramsey herself from posting on Facebook for 30 days. Her offense was reposting, from another Facebook user’s page, a suggestive image of a sleeping woman along with a string of comments calling for rape. Ramsey added the caption: “Women don’t make memes or comments like this #NameTheProblem”

Facebook restored Ramsey’s post after ProPublica brought it to the company’s attention. The content as a whole didn’t violate the guidelines because the caption attached to the photo condemned sexual violence, the spokesperson said.

Facebook’s about-face didn’t mollify Ramsey. “They give you a little place to provide ‘feedback’ about your experience,” she said. “I give feedback every time in capital letters: YOU’RE BANNING THE WRONG PEOPLE. It makes me want to shove my head into a wall.”

–Ariana Tobin, Madeleine Varner and Julia Angwin, ProPublica

woody says

Wow, here’s a thought: don’t go on Facebook. Problem solved. Whaaaa.

Richard says

Facebook is and has been detrimental to the human race, physically, mentally and emotionally. Glad that I never succumbed to the useless waste of time and all the frenzy associated with its use.

Pogo says

@The 1st Amendment first

I sympathize with those who battle hate speech, but I live in a country where soulless corporations have the same rights to speech as a natural person – thanks to the long traditions of the SCOTUS:

We Have a Supreme Court That Comforts the Comfortable and Afflicts the Afflicted

http://www.truth-out.org/progressivepicks/item/30266-we-have-a-supreme-court-that-comforts-the-comfortable-and-afflicts-the-afflicted

Hate radio stars live like sultans with the money corporate sponsors pay to them to pour their pernicious nonsense into the gaping holes in the universe that listen to them – which they (Rush, Coulter, Fox) defend as humor when they’re called to account. The joke there is that they’re not joking, never were, and damn well know it too.

I dread the day when I’m not allowed to pierce the balloons of pious hot air that hold them afloat. Indeed, I call it a duty – and others have too:

protect the afflicted and afflict the comfortable

https://www.google.com/search?q=protect+the+afflicted+and+afflict+the+comfortable&ie=utf-8&oe=utf-8&client=firefox-b-1

Today in Media History: Mr. Dooley: ‘The job of the newspaper is to comfort the afflicted and afflict the comfortable’

By David Shedden · October 7, 2014

https://www.poynter.org/news/today-media-history-mr-dooley-job-newspaper-comfort-afflicted-and-afflict-comfortable

(Press pg dn to take you to the best video you’re likely to see today)

Concerned Citizen says

The thing about the internet these days is that it allows for a whole generation of keyboard warriors. This is especially true of social media, since Facebook allows fake profiles that let you hide in anonymity.

Trolls, whether they are state-sponsored or private citizens, feel empowered and say things on the screen they would never say in person or in public.

Cyber bullying has become rampant, and unfortunately few resources are devoted to it by the law enforcement community.

If folks are so concerned about how the net is run, i.e., net neutrality, they should refocus their concerns and get laws passed to help curb some of this nonsense out there.

People like to throw the whole free speech thing out there. While it might be true to a point, freedom of speech does not absolve you of responsibility.

If your words cause harm to someone and they can track you down you could and should be held responsible and punished accordingly.

Jogging Snowbird says

Complain, complain and complain. That’s all anyone does anymore. How about people mind their own business and go on with their lives? The world is turning into one big complaining crybaby. Grow up, and get over what other people say; everyone has an opinion and it will always be that way. You can’t censor everything. Get a life.

Brad W says

This is a very important topic and one that I think is very misunderstood. For the most part people continue to see “online” as something separate from “offline”. The reality is that line went away a long time ago. The erasing of that line came with real-name profiles. So to better understand internet-based social networks today, for the first time we can truly look at offline as well.

The fact is that these social networks provide us a glimpse into the world as we have never seen it before. These aren’t behaviors or thoughts of someone just because they are online. These behaviors and comments provide a glimpse of who the person actually is online and offline.

So what does that have to do with “enforcement”? To answer that we look to offline. How does society respond typically offline? It typically rejects it and rejects the individual. In essence, the community moderates in many ways. For example, the previous comments that were on this post and have since disappeared gave a glimpse into a young woman who is an investment coach in the Daytona Beach area. Based upon her comments I would not do business with her or recommend her. A local real estate agent recently engaged in what is best described as a racist dialogue in a conversation on an individual’s profile. That resulted in the agent receiving poor reviews on their business page with screenshots of their comments. This is no different than if the person had made the same comments offline and was overheard.

The real problem, in my opinion, is fear of influence in the online world and not “rule enforcement”: the idea that if people see it online they will be influenced by it, change their minds, and behave differently. When KKK flyers show up on driveways in our neighborhoods, do they cause a mass of people to want to join and become racists? No. Society finds it repugnant and rejects it and those who would sign up. Where the real effort and discussion needs to be, in my opinion, is education on how to tell which online sources are legitimate. You will always have those who hate wanting to flood channels with their message, but just like always, the more it is rejected the quicker it fizzles out.

Challenge individuals who try to spread messages of hate and that which you find repugnant/repulsive. Hold them to account. Just as you would offline at a social event. What they are posting is who they are here and in the physical space.

Melted snowflake says

I use Facebook because that’s the way I connect with many of my friends; and… I agree with Woody and Richard 100%.

Concerned Citizen says

@ Jogging Snowbird

Kind of ironic that you post about complaining and then complain about it at the same time. The nice thing about forums of this nature is it gives us common ground to voice opinions.

If you don’t like what you are reading no one is forcing you to read it or post a comment telling others to grow up.

In case you don’t follow the news, hate posts and cyber bullying lead to hate crimes and even suicide in some cases.

I think it’s a serious issue that needs to be addressed sooner rather than later. As I stated in my earlier post, you may very well have freedom of speech protected by the First Amendment. If your words cause harm or death, then you could and should be held legally responsible for what you post.

YankeeExPat says

As a kid I remember my Dad telling my Mom not to waste her time gossiping with other neighborhood ladies in the deli section of the A&P grocery store. He would tell her that she and the other ladies were clucking like overexcited chickens. A different time, a different world, I guess.

Jeez... Now I feel like an Old Fart!

Really says

All the more reason not to be on it.

Dave says

If you say something racist, hateful or evil online, that means you are racist, hateful and evil in real life; there’s no difference between online and real life.

Sherry says

Excellent post Brad W! We should hold one another accountable to moral and societal standards of speech as well as behavior. Each one of us has a responsibility to use our influence to help craft a more healthy and just culture.

Our good nation once led the way when it came to at least attempting to do the moral and “right” thing for everyone. We need to move away from the fear and hate that have become pervasive in our society, and return to the ethical thinking of those who wrote our constitution.

USA Lover says

Not sure why people are opposed to this when Facebook allows ISIS to recruit members, and always has.

MS Auggie says

There’s a guy on FB who honestly says outright that all gay people should be killed. His name is Theodore/Ted Shoebat, and his father, Walid, feels the same way. I reported him numerous times. He’s posting on the Disqus community NOW, twisting news stories like the recent one about a married Pensacola man who raped a 4-year-old (his wife called the police when she found pictures on his phone) into “He was a gay man.” He’s really sick and has a disturbing following.

MS Auggie says

The most disturbing FACT IS HE USES RELIGION to back up his opinions, saying it is God’s will…

Mark says

Wow, you mean there are bad people in the world who say bad things. I wonder if there are any good people who say bad things sometimes? Who gets to judge what is bad? Why have a “freedom of speech” protection as our first right? Maybe since not one of us is perfect we should just kill the entire human population! Let the person who has never said anything bad fire the first bullet. Where have I heard that before?

Sherry says

There is absolutely nothing wrong with “being civilized”, being ethical, being truthful, being honest, being loving, being courteous, being kind. . . .

The idea of the “Freedom” to speak and be hateful and disgusting and lying and dangerous and racist, etc., is indicative of the decline of civilization. This is precisely what we are seeing in our political “theater” and in some of the comments here.

Anonymous says

Facebook serves no constructive purpose and just entices people to have their phones in their faces more than they need to. So many people air their private business or just share vulgar stuff that amounts to nothing. If you have something to say, pick up the phone and call someone or go see them. Email works just fine. Facebook is a tool that we are blind to that is making certain individuals rich and collecting information behind our backs. It should be banned! If people worked as much as they stayed on Facebook, we wouldn’t have so many people on government assistance and the unemployment rate would be at an all-time low!