Even the formula’s name is beguiling: the value-added model, which sounds more like a form of consumption tax or something out of the manufacturing or marketing sectors than anything to do with education. The words alone suggest that they apply to inanimate objects or “products” rather than human beings, let alone students or children, which may explain the tendency of teachers in Florida to recoil when they hear the words.

But that’s precisely what the words apply to. The Florida Department of Education developed the model and began applying it during the 2011-12 school year. It is designed to measure a teacher’s effectiveness. Most teachers in the state get a VAM score. That score is based on the year-to-year performance of students on standardized tests, and on how those results reflect on a teacher. In essence, a student’s results largely determine a teacher’s “value,” regardless of that student’s social, economic or psychological circumstances.

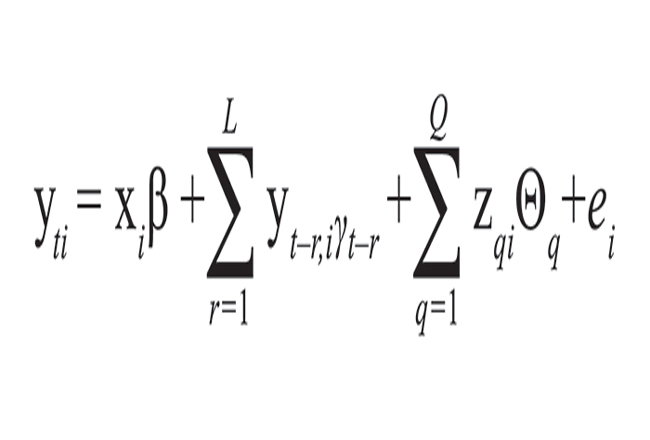

The formula is very controversial, because it relies on seemingly mathematical measures that are, in fact, premised on numerous arbitrary variables that may or may not have any real value in determining a teacher’s effectiveness. The state’s handling of the formula has also been controversial. The Department of Education refused to release the data that the formula generated, teacher by teacher—until the Florida Times-Union sued the department, arguing—correctly—that the data are a public record. The state lost the suit in November.

Monday, the Department of Education released masses of data in compliance with the court order, but it did so under protest.

“Because these data are intended to be used in conjunction with other information about classroom practice to form a complete evaluation, looking at this information in isolation can lead to misunderstandings about an individual teacher’s overall performance,” Kathy Hebda, chief of staff at the Department of Education, said Monday.

The Florida Education Association, the state’s teachers union, immediately criticized the data as “flawed,” as did many teachers and a Flagler County School Board member, but for varying reasons.

Criticism from most angles over a controversial measure of teacher effectiveness.

“Once again the state of Florida puts test scores above everything else in public education and once again it provides false data that misleads more than it informs,” Andy Ford, president of the union, said. “When will the DOE stop being beholden to flawed data and when will it start listening to the teachers, education staff professionals, administrators and parents of Florida?”

Ford said the rankings of teachers could not be taken too seriously because the numbers provided by the education department are based solely upon student test scores, in many cases ranking teachers on students they didn’t teach or on subjects they didn’t teach.

Flagler County School Board member Colleen Conklin has long been a critic of the evaluation formula. Last year she carried a copy of it and displayed it, or ridiculed it, on many occasions, including when Sen. John Thrasher spent a day visiting the district. She considers not only the formula flawed, but also the results it produces.

“Paying teachers on the success of their students sounds like a good idea,” Conklin said, likening the idea to an appealing sound bite. “However, it is no small task and is beyond complex. Children are not widgets cranked out in a factory line and our teachers are not factory workers. The data being released from the DOE is almost useless to reporters and the public. It’s raw data. It’s out of context and does not tell an accurate story. For example, there are inconsistencies in the data. Roster verification has to be done to ensure those students were actually on that teacher’s roster.”

Verifying the data and matching it to the correct teachers is consuming, and will continue to consume, a lot of district staff time, not just in Flagler but across the state.

Flagler County School Superintendent Jacob Oliva explained that the “Roster Verification” tool allows the district to remove students from the VAM calculations once it’s determined that those students have spent only a brief amount of time with a given teacher. To be counted in VAM calculations, students in year-long courses have to have been enrolled for the majority of both semesters, and have been counted at survey time in October and February. For semester-long courses, students have to have been enrolled at least 46 days. And for so-called wheel classes, students have to have been in that class at least 51 percent of the instructional time.
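For readers who want to see how those enrollment thresholds fit together, here is a minimal Python sketch of the rules as Oliva described them. It is an illustration only, not the state's or the district's actual Roster Verification tool: the `Enrollment` record, its field names and the `counts_toward_vam` function are all hypothetical, and reading "the majority" of a semester as more than half of its days is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Enrollment:
    """Hypothetical record of one student's time in one teacher's class."""
    course_type: str                      # "year", "semester", or "wheel"
    sem1_fraction: float = 0.0            # share of first-semester days enrolled
    sem2_fraction: float = 0.0            # share of second-semester days enrolled
    in_october_survey: bool = False       # counted at the October survey
    in_february_survey: bool = False      # counted at the February survey
    days_enrolled: int = 0                # used for semester-long courses
    instructional_fraction: float = 0.0   # used for wheel classes


def counts_toward_vam(e: Enrollment) -> bool:
    """Return True if the student would stay in the teacher's VAM calculation."""
    if e.course_type == "year":
        # Assumption: "majority" means more than half of each semester's days.
        return (
            e.sem1_fraction > 0.5
            and e.sem2_fraction > 0.5
            and e.in_october_survey
            and e.in_february_survey
        )
    if e.course_type == "semester":
        # Enrolled at least 46 days.
        return e.days_enrolled >= 46
    if e.course_type == "wheel":
        # Present for at least 51 percent of the instructional time.
        return e.instructional_fraction >= 0.51
    raise ValueError(f"unknown course type: {e.course_type!r}")
```

Under this sketch, a student enrolled 45 days in a semester-long course would be dropped from that teacher's calculation, while one enrolled 46 days would be kept.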

The value-added approach itself is flawed, Conklin said. “Instead of getting into the entire discussion about why utilizing a single test, on a single day is a bad idea I’d ask us to consider how disrespectful this move truly is to Florida’s teachers,” she said. “The Department of Education fought this in court and did not want to release this data because they know it doesn’t tell an accurate picture. Once again, it is a slap in the face to our teachers and students across Florida. They are so much more than one test, on one day. There has to be a better way.”

Oliva would not go so far as calling the data flawed, seeing in it just one among many measures of students’ “predicted growth.”

“One important component to remember with teacher evaluation is that these data are used in conjunction with deliberate practice,” Oliva said. “Looking at it in isolation can be confusing and misleading, and may not provide an accurate picture of overall teacher performance. Historically, we know that our schools are rated as highly effective and we are proud of our teacher performance.”

The teachers’ perspective is different. “Seventy percent of our teachers, prior to this school year, have been given VAM scores based on students and subjects they don’t teach,” Katie Hansen, president of the Flagler County Educators Association, the local teachers union, said. “Many teachers who, prior to this school year, were given ‘school score’ (a VAM score based upon the average learning gains on FCAT of every child at the school), are assigned a rating that has little to no correlation to the curriculum they are providing to students. Until the state of Florida can develop a valid and reliable system of evaluating teachers, this information should be treated for what it is – a flawed attempt to hold teachers accountable.”

Nancy N. says

Everyone consistently fails to mention in these discussions (probably because it’s politically incorrect) one of the biggest flaws of pegging teacher evaluations to test scores – it assumes that the kids are giving 100% effort on the tests. And frankly, a significant number of kids are just phoning it in because they know that their specific grade isn’t really significant to them, or they are even just marking random answers because they just don’t care.

A.S.F. says

If I were a teacher, I wouldn’t want to teach in a troubled school district on the basis that I might not be able to support my family, no matter how much I might want to contribute my talents to improving the lives of disadvantaged kids. I would be caught between my desire to contribute to those who might need me most in the classroom and those who need me to be making a decent living at home.

Summer says

How can a sixth grade science teacher be graded using the value added model when the formula is based on using only the math and reading scores? Is that science teacher supposed to drop science to teach reading and math to struggling students? I agree with A.S.F. that no teacher in his or her right mind is going to want to teach in a troubled school district. For the record, I do teach, and I have a -46 VAM score, based on students that I do not teach at all and on students with poor reading and math scores. Many science and social studies teachers have scores in the -40 or -50 range. This does not reflect on our teaching, but rather on the tool used to measure our teaching. The money spent on this system could have been put to better use.